Distinguishing Between Human and AI Responses in Assessments

Overview

- Generative AI is reshaping educational assessment, raising pressing questions about the integrity and validity of test results.

- Item Response Theory (IRT), combined with person-fit statistics, offers a promising way to distinguish human responses from AI-generated ones.

- Understanding these differences is essential for designing valid assessments that reflect what students have actually learned.

The Disruption of AI in Education

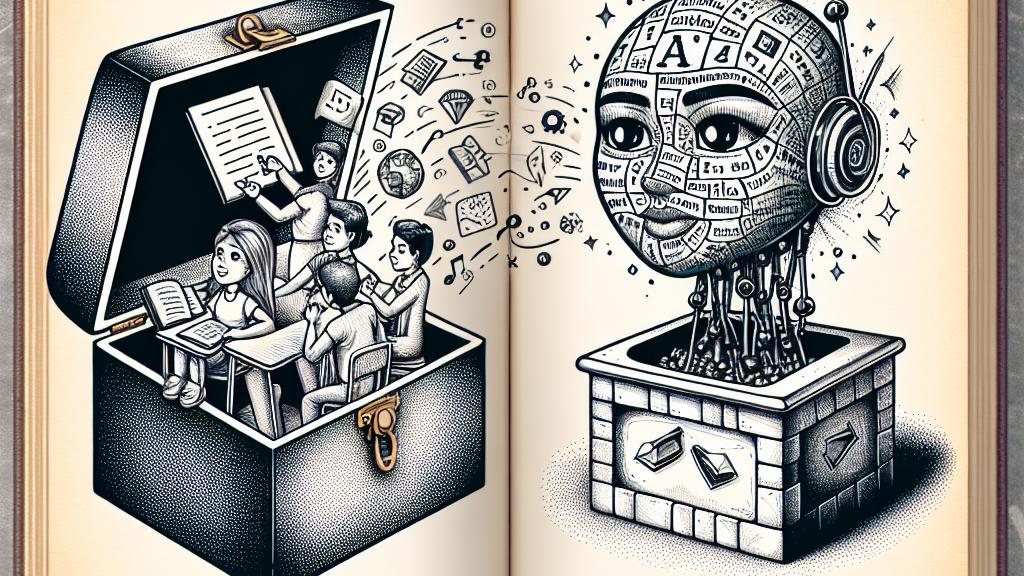

Generative AI tools such as ChatGPT and Claude are changing how students approach their studies. Consider a high school student who feels overwhelmed while preparing for a math exam and uses an AI tool to answer multiple-choice questions. What seems harmless at first raises serious ethical questions about assessment integrity. As AI use spreads, educators face an urgent challenge: keeping their assessments reliable measures of student learning. If this goes unaddressed, it could erode trust in educational systems and devalue academic credentials.

Harnessing the Potential of Item Response Theory (IRT)

Item Response Theory (IRT) offers a promising response to this problem. IRT is a psychometric framework that models the probability of answering an item correctly as a function of the respondent's latent ability and the item's characteristics, such as difficulty and discrimination. Research by Alona Strugatski and Giora Alexandron builds on this framework to test whether humans and AI produce distinct response patterns on the same assessment. Their study used person-fit statistics, which measure how closely an individual's pattern of right and wrong answers matches what the IRT model predicts for a respondent of that ability. With this approach they identified differences that separate AI-generated answers from human ones, and their results suggest that AI responses lack the nuance typical of human response patterns. The work contributes to research on assessment validity and gives educators a practical tool for protecting the integrity of their evaluations.
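To make the person-fit idea concrete, here is a minimal sketch. It assumes a two-parameter logistic (2PL) IRT model with known item parameters and uses the standardized log-likelihood statistic l_z (Drasgow, Levine and Williams, 1985), one widely used person-fit statistic. It illustrates the general technique only and does not reproduce the model, statistic, or data from the Strugatski and Alexandron study. The function names and the 10-item bank are hypothetical.

```python
import math

# 2PL IRT model: P(correct | theta) = 1 / (1 + exp(-a * (theta - b)))
# theta = respondent's latent ability, a = item discrimination, b = item difficulty.
def p_correct(theta: float, a: float, b: float) -> float:
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(responses, items, theta):
    """Log-likelihood of a 0/1 response vector at ability theta."""
    total = 0.0
    for u, (a, b) in zip(responses, items):
        p = p_correct(theta, a, b)
        total += u * math.log(p) + (1 - u) * math.log(1.0 - p)
    return total


def estimate_theta(responses, items, lo=-4.0, hi=4.0, steps=801):
    """Maximum-likelihood ability estimate via a bounded grid search.
    Bounding the grid keeps all-correct or all-wrong patterns finite."""
    grid = (lo + (hi - lo) * i / (steps - 1) for i in range(steps))
    return max(grid, key=lambda t: log_likelihood(responses, items, t))


def lz_statistic(responses, items):
    """Standardized log-likelihood person-fit statistic l_z.
    Values well below zero mean the answer pattern is unlikely
    given the respondent's own estimated ability."""
    theta = estimate_theta(responses, items)
    observed = log_likelihood(responses, items, theta)
    expected, variance = 0.0, 0.0
    for a, b in items:
        p = p_correct(theta, a, b)
        q = 1.0 - p
        expected += p * math.log(p) + q * math.log(q)
        variance += p * q * math.log(p / q) ** 2
    return (observed - expected) / math.sqrt(variance)


# Hypothetical 10-item bank of (discrimination a, difficulty b), easiest first.
ITEMS = [(1.0, b) for b in (-2.25, -1.75, -1.25, -0.75, -0.25,
                            0.25, 0.75, 1.25, 1.75, 2.25)]

# Every item has the same discrimination, so the ability estimate depends only
# on the number correct: both patterns (5 of 10 correct) get the same theta.
consistent = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # solves easy items, misses hard ones
aberrant = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]    # misses easy items, solves hard ones

print(f"consistent l_z = {lz_statistic(consistent, ITEMS):.2f}")
print(f"aberrant   l_z = {lz_statistic(aberrant, ITEMS):.2f}")
```

Both respondents earn the same score, but the first pattern yields a positive l_z and the second a strongly negative one. That contrast is the kind of signal person-fit analysis uses to flag answers that do not look like a typical human test-taker's. In practice, item parameters would be calibrated on real human response data. Also, the normal approximation for l_z is known to be rough when ability is estimated from the same responses, so any cutoff should be treated with care.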

Reimagining Assessments for Authentic Learning Experiences

With insights from IRT in hand, educators have an opportunity to rethink how assessments are structured. Traditional test questions can be turned into scenarios that demand critical thinking. Instead of asking, "What are the main causes of climate change?", an educator might ask, "As a delegate to an international summit, how would you approach climate change and persuade other countries to take action?" Questions like this push students to think more deeply and may also make it harder for AI to produce a satisfactory answer. Assessments that encourage discussion, collaboration, and application of knowledge can further enrich the learning environment. They help students build the skills they will need while reducing opportunities for AI-assisted cheating.
