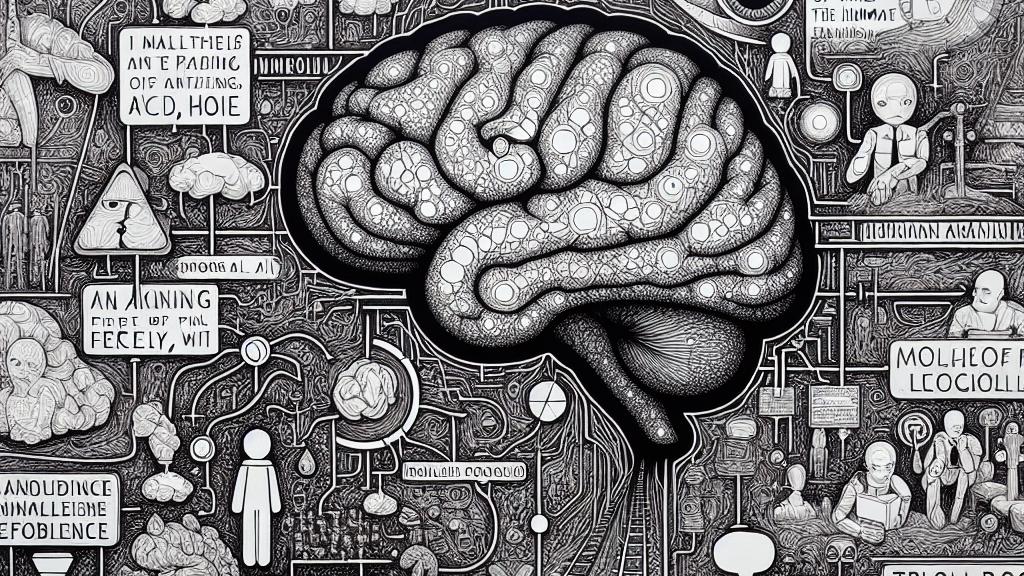

Exploring Moral Agency and Free Will in Language Models

Overview

- Examines the concept of moral agency in large language models (LLMs).

- Uses Dennett's compatibilist framework to redefine the traditional notion of free will.

- Investigates the decision-making processes of LLMs in ethical dilemmas.

Understanding Moral Agency in LLMs

This study, conducted in the United States, asks whether large language models (LLMs) can exhibit traits of moral agency, a capacity traditionally reserved for sentient beings. Morgan S. Porter, who leads the work, highlights that LLMs can engage in a form of ethical deliberation by processing vast amounts of data and simulating rational choices. For example, when faced with a scenario like the trolley problem, in which one must decide whether to sacrifice one person to save a group, an LLM can analyze the potential outcomes under different ethical frameworks. Because the models adapt their decisions when given updated information, they appear to mimic human-like reasoning. This finding challenges conventional beliefs about moral agency and suggests that consciousness might not be a prerequisite for moral reasoning in artificial entities.

The Framework of Free Will

Drawing on the philosophical work of Daniel Dennett and Luciano Floridi, the framework presented in this research treats free will not as an absolute but as a spectrum shaped by factors such as reason-responsiveness and value alignment. This approach enriches our understanding of free will and positions LLMs as potential moral agents capable of navigating complex ethical questions. Consider an LLM used in a healthcare setting to make recommendations based on patient data. Its ability to weigh moral implications alongside logical deductions marks a shift in how we perceive the alignment of machine decision-making with human values. Such examples raise questions about the moral responsibilities inherent in programming these systems.

Implications for Future Research

The implications of Porter's findings are broad and bear on the evolving intersection of ethics and artificial intelligence. If moral agency is redefined to include advanced models that can reason about ethical dilemmas, we must also consider what that means for human responsibility in AI development. A future in which LLMs can reflect on their decisions and learn to prioritize ethical considerations based on societal norms would change how we view machine learning. This prospect challenges the traditional boundaries between human and artificial morality and underscores the need for robust ethical guidelines governing AI. Understanding agency in both artificial and biological contexts therefore becomes crucial, and it calls for a multidisciplinary approach that combines insights from philosophy, technology, and ethics.
