Understanding LLM Interpretability with Sparse Autoencoders in Llama 3.2

Overview

- Learn how Sparse Autoencoders (SAEs) support mechanistic interpretability by decomposing a model's internal activations into interpretable features.

- See how SAEs can be applied to Llama 3.2, Meta's family of open-weight language models.

- Find resources and tools to help you build your own interpretability pipeline.

Introduction to LLM Interpretability

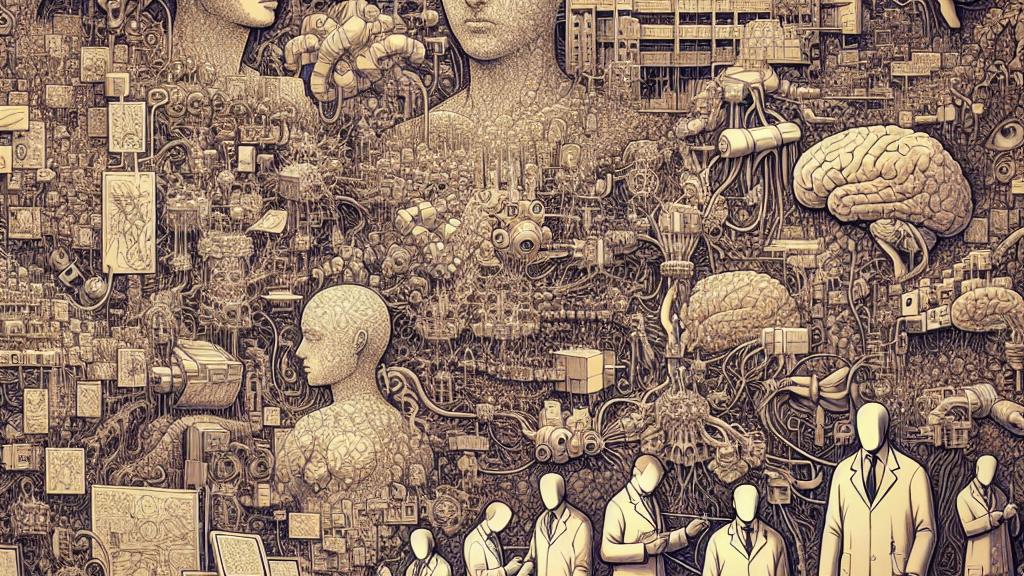

As large language models (LLMs) grow more capable, understanding how they arrive at their outputs has become a pressing concern. Llama 3.2 makes a useful case study: because its weights are openly available, researchers can inspect its internal activations directly. A central tool for this work is the Sparse Autoencoder (SAE). Individual neurons in an LLM are often polysemantic, meaning a single neuron responds to several unrelated concepts, which makes raw activations hard to read. An SAE is trained to reconstruct those activations as a sparse combination of a larger set of learned features, each of which tends to correspond to a single, more interpretable concept. This gives us a way to study how the model's internal representations relate to the decisions it makes. The approach builds on research from organizations such as OpenAI, Anthropic, and Google DeepMind, whose work on SAEs has advanced mechanistic interpretability and its role in the evolving AI landscape.
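To make the "sparse combination of learned features" idea concrete, here is a minimal sketch of an SAE forward pass and training objective. It assumes the formulation commonly used in published SAE work: a ReLU encoder, a linear decoder, and a loss that adds mean squared reconstruction error to an L1 penalty on the feature codes. The class name, the `l1_coeff` value, and the hand-picked weights are illustrative assumptions, not code from any particular Llama 3.2 pipeline, and it uses plain Python lists rather than PyTorch tensors so it runs without dependencies.

```python
from dataclasses import dataclass


def relu(x: float) -> float:
    """Rectified linear unit: negative pre-activations become exactly zero."""
    return x if x > 0.0 else 0.0


@dataclass
class SparseAutoencoder:
    """Toy SAE mapping a d_model-dim activation to n_features sparse codes and back.

    Real SAEs are overcomplete (n_features much larger than d_model) and are
    trained with gradient descent; here the weights are set by hand.
    """
    w_enc: list[list[float]]  # [n_features][d_model]
    b_enc: list[float]        # [n_features]
    w_dec: list[list[float]]  # [d_model][n_features]
    b_dec: list[float]        # [d_model]

    def encode(self, x: list[float]) -> list[float]:
        # f = ReLU(W_enc @ x + b_enc): non-negative and, ideally, mostly zero
        return [relu(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w_enc, self.b_enc)]

    def decode(self, f: list[float]) -> list[float]:
        # x_hat = W_dec @ f + b_dec: each active feature adds its own direction
        return [sum(w * fi for w, fi in zip(row, f)) + b
                for row, b in zip(self.w_dec, self.b_dec)]

    def loss(self, x: list[float], l1_coeff: float = 1e-3) -> tuple[float, list[float]]:
        # Training objective: reconstruction error plus an L1 penalty on the codes
        f = self.encode(x)
        x_hat = self.decode(f)
        mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        l1 = sum(abs(fi) for fi in f)
        return mse + l1_coeff * l1, f


# Hypothetical 2-dim -> 4-feature example: each feature reads one signed axis.
sae = SparseAutoencoder(
    w_enc=[[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]],
    b_enc=[0.0, 0.0, 0.0, 0.0],
    w_dec=[[1.0, -1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]],
    b_dec=[0.0, 0.0],
)
total, codes = sae.loss([0.5, -2.0])
print(codes)              # [0.5, 0.0, 0.0, 2.0]: only two of four features fire
print(sae.decode(codes))  # [0.5, -2.0]: exact reconstruction
```

The L1 term is what pushes most codes to zero; without it, the autoencoder could spread each input across many features and lose the one-concept-per-feature property. In practice the weights are learned from millions of recorded activations rather than set by hand.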

The Role of Sparse Autoencoders in Llama 3.2

Applying Sparse Autoencoders to Llama 3.2 is a meaningful step toward clearer AI understanding. Note that the SAE is not part of the released model: it is a separate network trained on activations recorded from one of Llama 3.2's layers. Imagine entering an art gallery filled with abstract paintings, where each neuron resembles a brushstroke. In the raw model, many strokes blend together, so a single neuron can contribute to several unrelated concepts. An SAE separates these blended strokes so that each learned feature expresses a single concept. Take sentiment analysis as an example: ideally, a feature that corresponds to happiness should fire for happiness alone, without also encoding sadness or anger. Features this clean make the model easier to inspect and debug, which could in turn benefit applications such as educational tools and creative writing aids. The pipeline, which captures the model's activations and helps interpret the learned features, is written in PyTorch, making it accessible to experienced developers and newcomers alike.
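A common way to give a learned feature a human-readable label, as in the happiness example above, is to look at the tokens on which it activates most strongly, and to check how often it fires at all. The sketch below assumes per-token SAE feature codes have already been computed; the function names `top_activating_tokens` and `feature_density`, the tokens, and the activation values are all hypothetical and made up for illustration.

```python
import heapq


def top_activating_tokens(tokens, feature_acts, feature_idx, k=3):
    """Return up to k (activation, position, token) triples where one SAE feature fires hardest.

    tokens:       list of token strings, length n_tokens
    feature_acts: per-token SAE codes, shape [n_tokens][n_features]
    """
    # Keep only positions where the feature is active (SAE codes are >= 0)
    candidates = (
        (acts[feature_idx], pos, tok)
        for pos, (tok, acts) in enumerate(zip(tokens, feature_acts))
        if acts[feature_idx] > 0.0
    )
    # nlargest orders by activation; ties fall back to comparing position
    return heapq.nlargest(k, candidates)


def feature_density(feature_acts, feature_idx):
    """Fraction of tokens on which the feature is active; low values mean sparse."""
    active = sum(1 for acts in feature_acts if acts[feature_idx] > 0.0)
    return active / len(feature_acts)


# Illustrative, made-up activations for two hypothetical features
tokens = ["I", "feel", "so", "happy", "and", "glad", "today"]
acts = [[0.0, 0.1], [0.2, 0.0], [0.0, 0.3], [3.1, 0.0],
        [0.0, 0.0], [2.4, 0.0], [0.0, 0.5]]
print(top_activating_tokens(tokens, acts, feature_idx=0, k=2))
# [(3.1, 3, 'happy'), (2.4, 5, 'glad')]
print(feature_density(acts, feature_idx=0))
```

Here feature 0 fires hardest on "happy" and "glad", which would suggest a happiness-related label; in a real analysis you would inspect many contexts across a large corpus before trusting such a label.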

Finding Resources and Future Directions

For readers who want to go further, many resources are available to enthusiasts and experts alike. The 'Awesome LLM Interpretability' repository, for example, collects tutorials, research papers, and specialized libraries for anyone who wants to explore this field in depth. These resources give researchers practical tools and can inspire new approaches to open problems in machine learning. As the field matures, we can expect further insights into how AI systems behave, which will also inform discussions of their ethical implications. With new tools appearing regularly, deciphering machine intelligence remains an active and engaging area for anyone interested in AI and its applications.
