Unlocking the API Mysteries: NESTFUL and the Next Generation of LLMs!

Overview

- NESTFUL is a benchmark that evaluates LLMs on their ability to handle nested API calls, where the output of one call feeds the input of a later one.

- The benchmark contains 300 curated examples, split into executable and non-executable API sequences.

- The findings show that leading models handle simple API requests well but struggle with nested sequences, which points to a need for better evaluation methods in AI development.

Introduction to NESTFUL

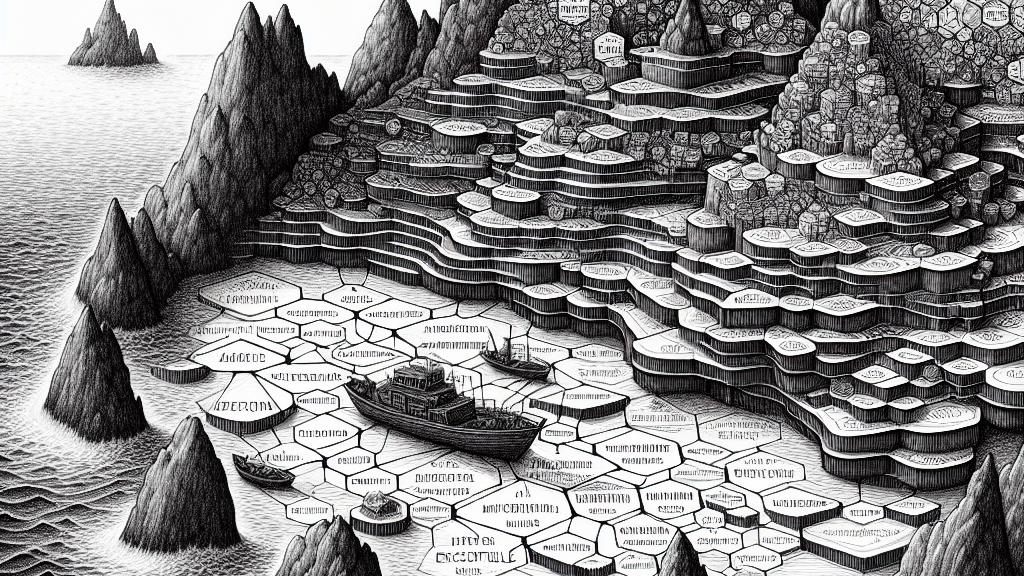

As artificial intelligence evolves, the NESTFUL benchmark changes how we assess large language models (LLMs). Where many evaluations stop at single function calls, NESTFUL targets nested sequences of API calls, in which one API's output becomes another's input. The benchmark consists of 300 hand-picked samples, split into executable and non-executable categories. Each sample lets researchers examine how well an LLM plans and connects a chain of dependent calls. That level of scrutiny matters to anyone building systems that rely on LLMs to drive real tools and services.
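To make the idea of "one API's output becomes another's input" concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the three toy functions, the `REGISTRY` table, the `label` field, and the `$var` reference syntax are hypothetical and are not taken from NESTFUL's actual data format.

```python
from typing import Any, Callable, Dict, List

# Hypothetical toy "APIs" used only for illustration.
def get_user_id(username: str) -> int:
    return {"alice": 7, "bob": 9}[username]

def get_order_total(user_id: int) -> float:
    return {7: 120.0, 9: 35.5}[user_id]

def apply_discount(amount: float, percent: float) -> float:
    return round(amount * (1 - percent / 100), 2)

# Hypothetical lookup table from API name to implementation.
REGISTRY: Dict[str, Callable[..., Any]] = {
    "get_user_id": get_user_id,
    "get_order_total": get_order_total,
    "apply_discount": apply_discount,
}

def run_sequence(calls: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Execute calls in order, resolving "$label" arguments from earlier results."""
    results: Dict[str, Any] = {}
    for call in calls:
        args: Dict[str, Any] = {}
        for key, value in call["arguments"].items():
            if isinstance(value, str) and value.startswith("$"):
                # Nested reference: reuse the output of an earlier call.
                args[key] = results[value[1:]]
            else:
                args[key] = value
        results[call["label"]] = REGISTRY[call["name"]](**args)
    return results

# A three-step nested sequence: each step depends on the previous output.
sequence = [
    {"name": "get_user_id", "arguments": {"username": "alice"}, "label": "var1"},
    {"name": "get_order_total", "arguments": {"user_id": "$var1"}, "label": "var2"},
    {"name": "apply_discount", "arguments": {"amount": "$var2", "percent": 10}, "label": "var3"},
]

outputs = run_sequence(sequence)
print(outputs)
```

A model being tested on this kind of task has to get three things right at once: the choice of function, the argument values, and the references that wire each step to an earlier result. A single wrong reference breaks every step after it.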

The Challenge of Nested API Calls

What sets NESTFUL apart is its ability to expose weaknesses in even the most advanced models. Many LLMs manage straightforward API requests without trouble, yet they stumble when calls are nested and each step depends on the result of an earlier one. Think of an athlete who excels at sprinting but struggles with a gymnastics routine: strength in one skill does not guarantee competence in a harder, related one. NESTFUL's tasks are designed to probe exactly that gap between apparent skill and real-world performance, and numerous leading models fall short on them. The result calls for a critical reassessment of benchmarks that only test single, isolated calls, and for evaluation approaches that reflect the multi-step challenges AI systems face in practice.

Future Implications and Best Practices

The lessons from NESTFUL extend beyond the benchmark itself. As AI systems are integrated into larger software stacks, the behavior of the tools they call matters as much as the calls themselves. Consider a powerful framework like PySpark: it offers a great deal for data manipulation, but it also demands care. Its lazy evaluation is not just a technical detail. Transformations are not computed until an action asks for a result, so a mistake can surface far from the line that caused it if this behavior is ignored. By adopting rigorous, contextually relevant evaluation methods, we can improve the reliability of AI applications across sectors, including healthcare and finance. Better assessment techniques are essential for developing and deploying AI technologies that must adapt to changing user demands and industry standards.
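The lazy evaluation mentioned above can be illustrated with a short sketch. This is a plain-Python analogy built on generators, not PySpark code; the `double` function and the `log` list are hypothetical names chosen for the example. In PySpark the same principle applies: transformations such as `map` and `filter` only describe work, and an action such as `collect` or `count` triggers it.

```python
from typing import Iterable, Iterator, List

# Records each time real work is performed, so we can see when it happens.
log: List[str] = []

def double(values: Iterable[int]) -> Iterator[int]:
    """Lazily double each value; nothing runs until the result is consumed."""
    for v in values:
        log.append(f"double({v})")
        yield v * 2

# Building the pipeline does no work yet (analogous to a transformation).
pipeline = double([1, 2, 3])
calls_before = len(log)

# Consuming the pipeline forces the computation (analogous to an action).
result = list(pipeline)
calls_after = len(log)

print(calls_before, calls_after, result)
```

The practical consequence is that an error inside `double` would only be raised at the `list(pipeline)` line, which is why lazily evaluated pipelines need to be tested by actually forcing their results.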
