The Dark Side of Social Media: Why Are Teens Targeted by Violent Content?

Overview

- Social media algorithms are shockingly effective at exposing teens to violent and harmful content.

- Young users like Kai experience dramatic shifts from harmless videos to unsettling and graphic footage.

- Experts warn of the potential long-term psychological effects of these algorithmic recommendations.

The Experience of Teens with Violent Content

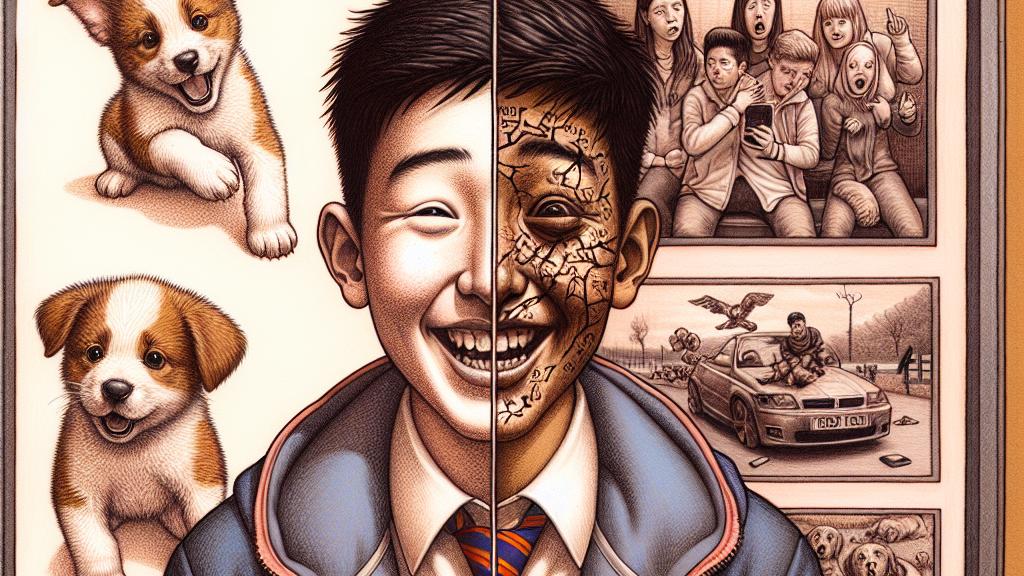

Imagine a typical day for 16-year-old Kai, a teenager in the UK scrolling through his social media feed. At first, his screen lights up with adorable puppies frolicking in the sun, moments that evoke joy and laughter. Then it feels as if he has plunged down a dark rabbit hole: the cheerful videos vanish, replaced by shocking scenes of an accident, a fight, and influencers casually promoting dangerous stereotypes about women. This isn’t just Kai’s story; it resonates with countless teens grappling with similar experiences. Analysts like Andrew Cowan describe a troubling pattern: boys are often shown alarming content, while girls are nudged toward entirely different types of engagement. This disparity points to a critical flaw in how social media algorithms operate and raises important questions about the responsibility of these platforms to protect their youngest users from harm.

Privacy and Content Moderation Challenges

Despite efforts by platforms like TikTok and Meta to implement AI-driven content moderation, significant gaps remain. Cowan points out that many disturbing videos go unchecked until they accumulate thousands of views, leaving vulnerable users like Kai exposed to distressing imagery at a young and impressionable age. Rather than safeguarding young minds, these algorithms function like sirens luring them into an ocean of toxic content, all in the name of engagement. Teens find themselves ensnared by violent visuals, which can lead to a grim cycle of repeated exposure. This escalating problem not only endangers mental health but also underscores a pressing need for these social media giants to put the safety of their users ahead of advertising metrics. In the end, what good is engagement if lives are at stake?

The Call for Change

Cowan’s call for change is not just hopeful; it is essential. Social media companies must evolve their algorithms, transforming them from tools of exploitation into instruments of protection. Imagine if, instead of mindlessly optimizing for clicks, these platforms truly listened to young users and distinguished between the content they want and the content they fear. Rather than resorting to blunt measures such as banning social media, which is impractical in a digital age, platforms could introduce tools that let users decisively customize their own experience. As Kai suggests, understanding what teens choose to avoid is crucial. The ultimate aim should be clear: foster positive, uplifting connections that empower young people rather than drag them into the depths of despair. This shift is not just a possibility; it is a moral imperative, and it could shape the futures of countless young users navigating a perilous digital landscape.
