Investigation into Elon Musk's X over Alleged Algorithm Bias

Overview

- French prosecutors are launching an in-depth probe into X's algorithms for alleged bias.

- Concerns are growing that X's algorithms distort political discourse.

- X could face significant penalties as the European Union ramps up scrutiny.

Introduction to the Investigation

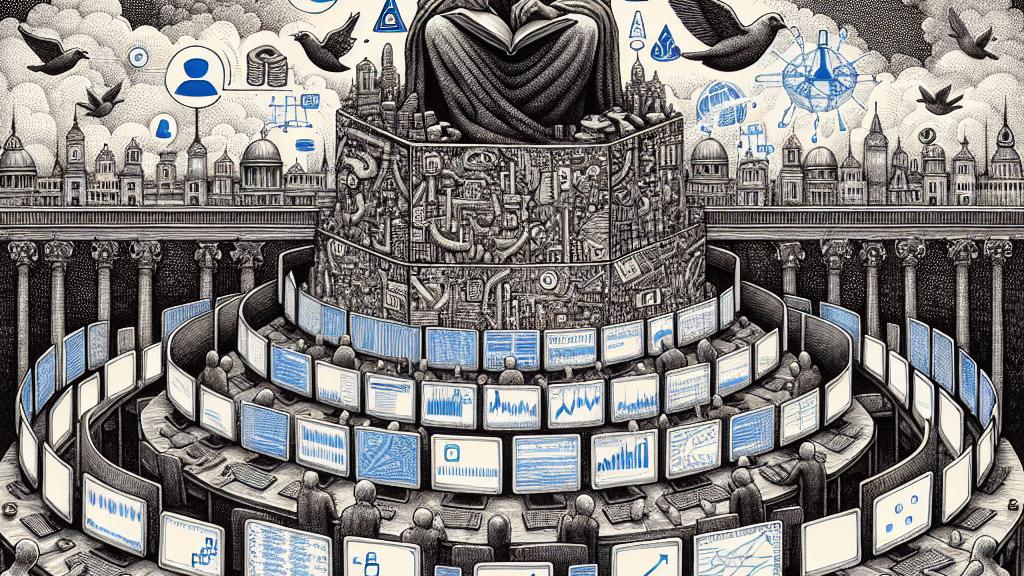

French authorities have decided to investigate Elon Musk's social media platform X, formerly known as Twitter. The investigation stems from allegations that X's algorithms are biased and may be distorting public discourse. The Paris public prosecutor's office is examining these algorithms closely to understand how they might be influencing political conversations and public perceptions. The stakes reach well beyond one company: inquiries like this one test how much power social media platforms hold over democratic debate itself.

Political Controversy Surrounding Algorithms

At the heart of this case lies a contentious issue: the intersection of technology and political integrity. Critics, including lawmakers and media commentators, assert that X's algorithms favor far-right content, thereby skewing political discourse on the platform. They point to Elon Musk's open support for Germany's far-right Alternative für Deutschland (AfD) party as a striking example. Many experts argue that such support is not merely an endorsement: it could also shape which political narratives the platform amplifies and which it silences. Imagine scrolling through your feed and seeing a flood of extreme viewpoints while moderate ones are buried under digital noise. That scenario raises serious questions about the role of social media in a well-functioning democracy. Users should understand that the algorithms behind their favorite platforms hold immense power, often deciding which voices rise to prominence and which are suppressed.

EU Investigations and Their Regulatory Fallout

As the French investigation intensifies, the European Union has also stepped up its scrutiny of X as part of its enforcement of the Digital Services Act (DSA). This landmark legislation is designed to address and mitigate the harmful effects of online content and to create a safer digital environment. The EU's demands for internal documents from X are not merely procedural; they could reveal practices that violate the law. If X is found to have mismanaged its algorithms, the consequences could be severe, ranging from fines of up to 6% of its global annual turnover to restrictions on its operations within Europe. The situation highlights an escalating conflict between tech giants and regulators at a moment when accountability matters more than ever. Ultimately, the story is not just about algorithms; it is about the ethical obligation of tech companies to ensure their platforms are fair and reflect the full range of views in society.